Historically here at Anu, we’ve adopted a one-size-fits-all model for our virtualisation platform, and adjusted the size of the virtual machines we deploy to meet each customer’s performance requirements.

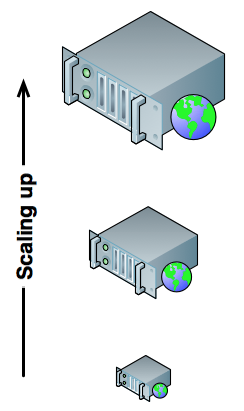

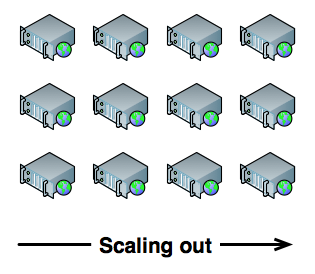

Depending on the application this could mean scaling up by allocating more CPU cores or memory to a single virtual machine, or scaling out using multiple virtual machines behind a load balancer. Both approaches were deployed on the same underlying hardware.

About 5 years ago, in response to customer demand for faster I/O, we split our storage offering into two categories: Standard Storage and Fast Storage. Initially we used 15k RPM SAS RAID arrays for Fast Storage; these days it’s all SSD.

As the years have gone by, we’ve bought increasingly bigger and faster servers to keep pace with customer demand for ever better performance. A side effect is that we can no longer fill an entire 46U rack with servers, because our datacenters cannot provide enough power and cooling density. This has led to wasted space in our colocation cabinets and increased spending on hardware, resulting in higher per-unit costs for our underlying infrastructure. In most cases this is fine, as revenue from bigger customer deployments has increased in line with cost.

However, there are two cases where scaling up doesn’t work. The first is the small customer who wants to keep costs low, or who only needs a small server for a task that is not resource-intensive; deploying such a customer on expensive hardware can be cost-prohibitive. The second is the customer who has designed their apps from the outset to scale out rather than up, as is increasingly the case for sites and apps that need to handle a large number of concurrent users. These apps gain more from adding servers than from making individual servers faster.

Simply splitting I/O into two categories is no longer enough: we need a faster, cheaper, more flexible approach.

After much debate, we decided to do a test deployment of Intel’s Atom server platform. The individual boxes are 1U servers with 4 cores @ 2.4GHz, 16GB RAM and 2 x 1TB SATA drives.

Instead of the 380W peak power draw per server that we see on our 16-core Xeon E5-2637 v3 @ 3.50GHz boxes, the Atom servers draw a measly 20W at peak. A quick CPU benchmark calculating 20,000 prime numbers showed exactly 50% of the Xeon’s per-core performance. Even taking the lower core density into account, the numbers work out: per core, the Atom draws 5W (20W / 4 cores) against roughly 24W for the Xeon (380W / 16 cores), so we get 50% of the performance for about 20% of the power draw. We’re happy with that.
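The per-core comparison is easier to check with the arithmetic written out. The post doesn’t name the benchmark tool, so `prime_benchmark` below is a hypothetical stand-in (single-threaded trial division that counts the primes up to 20,000, which is not quite the same as finding 20,000 primes) and is not necessarily the test behind the 50% figure. The wattages and core counts are the ones quoted above.

```python
import math
import time


def is_prime(n: int) -> bool:
    """Trial-division primality test; deliberately simple so it stays CPU-bound."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


def prime_benchmark(max_n: int = 20_000) -> tuple[int, float]:
    """Hypothetical stand-in benchmark: count the primes up to max_n on one core.

    Returns (prime_count, elapsed_seconds). Running the same call on each box
    and comparing elapsed times gives a relative per-core score.
    """
    start = time.perf_counter()
    count = sum(1 for n in range(2, max_n + 1) if is_prime(n))
    return count, time.perf_counter() - start


# Figures quoted in the post.
XEON_PEAK_W, XEON_CORES = 380.0, 16
ATOM_PEAK_W, ATOM_CORES = 20.0, 4
ATOM_PERF_PER_CORE = 0.5  # Atom core ~ 50% of a Xeon core on the prime benchmark

xeon_w_per_core = XEON_PEAK_W / XEON_CORES  # 23.75 W
atom_w_per_core = ATOM_PEAK_W / ATOM_CORES  # 5.0 W

# Per core: half the performance for roughly a fifth of the power.
power_ratio_per_core = atom_w_per_core / xeon_w_per_core  # ~0.21

# Whole box: 4 half-speed cores vs 16 full-speed cores, 20 W vs 380 W.
box_perf_ratio = ATOM_PERF_PER_CORE * ATOM_CORES / XEON_CORES  # 0.125
box_power_ratio = ATOM_PEAK_W / XEON_PEAK_W  # ~0.053

# Performance per watt relative to the Xeon (core count cancels out).
perf_per_watt_gain = box_perf_ratio / box_power_ratio  # ~2.4x

if __name__ == "__main__":
    count, secs = prime_benchmark()
    print(f"{count} primes up to 20,000 in {secs:.3f}s on this core")
    print(f"Atom per-core power: {power_ratio_per_core:.0%} of Xeon")
    print(f"Atom performance per watt: {perf_per_watt_gain:.2f}x Xeon")
```

Looking at whole boxes instead (12.5% of the performance for about 5% of the power) gives the same roughly 2.4x performance per watt as the per-core view, because the core-count ratio appears on both sides and cancels.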

Running a large pool of small servers presented a new challenge: how to handle storage. We discussed many options, such as centralised NAS or the local RAID we run on most of our other boxes, but neither offered the flexibility, scalability and price competitiveness we wanted from these new servers.

In the end we decided to give GlusterFS a try. Gluster uses the local SATA drives as “bricks” and combines them into a distributed, replicated, highly scalable filesystem that is accessible from all the servers simultaneously. This means we can live-migrate VMs to balance load dynamically with no downtime, we can scale filesystems beyond the 1TB size of a single physical drive, and when we run low on storage all we need to do is add more bricks. It also doesn’t require any specialised hardware or network gear.

The only open question was how it would perform in real life. Our first test cluster consisted of 3 bricks, using 3 x 1GbE bonded Ethernet as the transport (the servers have 4 built-in NICs; we reserved 1 for segregated public network traffic). Performance is approximately 60% of our traditional Standard Storage (7.2k RPM SATA RAID arrays) and just 6% of the SSD RAID in our Fast Storage arrays. We have, however, been running 13 small production VMs on the new deployment, and it is holding up well, with no high-I/O-load warnings from our monitoring system.
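To make “just add more bricks” concrete, here is a back-of-envelope capacity model for a distributed-replicated Gluster volume. It assumes equal-sized bricks, and the replica count is an assumption, since the post doesn’t state how many copies the volume keeps. In this layout bricks are grouped into replica sets, each set contributes one brick’s worth of usable space, and bricks have to be added in multiples of the replica count. With three 1TB bricks kept as three copies, for example, usable space is 1TB, so growing past a single drive’s size means adding another full replica set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicatedVolume:
    """Rough capacity model of a distributed-replicated GlusterFS volume.

    Assumes equal-sized bricks. replica_count is a hypothetical parameter:
    the post does not say how many copies the test volume keeps.
    """

    brick_tb: float
    bricks: int
    replica_count: int

    def __post_init__(self) -> None:
        if self.replica_count < 1 or self.bricks < 1:
            raise ValueError("need at least one brick and one replica")
        # Bricks are grouped into replica sets, so the brick count
        # must be a whole multiple of the replica count.
        if self.bricks % self.replica_count:
            raise ValueError(
                f"{self.bricks} bricks is not a multiple of replica {self.replica_count}"
            )

    @property
    def replica_sets(self) -> int:
        return self.bricks // self.replica_count

    @property
    def usable_tb(self) -> float:
        # Files are spread across replica sets; every brick in a set holds
        # the same data, so each set contributes one brick's capacity.
        return self.replica_sets * self.brick_tb

    def add_bricks(self, n: int) -> "ReplicatedVolume":
        """Return a new model with n more bricks (raises if n breaks the sets)."""
        return ReplicatedVolume(self.brick_tb, self.bricks + n, self.replica_count)


if __name__ == "__main__":
    # Hypothetical: a 3-brick cluster with 1 TB bricks and 3 copies of the data.
    vol = ReplicatedVolume(brick_tb=1.0, bricks=3, replica_count=3)
    print(vol.usable_tb)                # 1.0
    print(vol.add_bricks(3).usable_tb)  # 2.0
```

Choosing a lower replica count trades redundancy for capacity: the same six 1TB bricks give 2TB usable at replica 3 but 3TB at replica 2.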

Last week we racked up another 2 Atom servers to add to the pool. We want to do some further testing to see how the system scales under load, but initial results are very promising.

If you have any comments or questions, or if you’d like to deploy a horizontally scalable site, please don’t hesitate to get in touch.