Update 19:35 BST: The upgrade has been completed successfully. We have tested extensively to ensure maximum backwards compatibility with the old mail server, including all supported protocols and authentication methods. We are monitoring the new system closely for any problems or warnings. If you have any difficulty accessing your email, please do not hesitate to contact support.

Update 19:00 BST: We will shortly begin the migration to the new mail server. We expect access to POP3, IMAP and SMTP services to be disrupted for approximately 30-45 minutes. Any mail sent to you during this time will be queued and delivered to your mailbox once the migration is complete. We'll post an update here when it is finished.

Update 2016-09-09 07:30: We plan to go live with the upgraded mail server tonight at around 19:00 BST.

Update 00:05 CET: Due to unforeseen circumstances (a software incompatibility on the new system), we have had to postpone the upgrade of our POP3/IMAP service. We'll run further tests tomorrow and reschedule the upgrade once we're satisfied the issue has been resolved.

Update 23:40 CET: The upgrade is currently in progress. We'll post an update here when it is complete.

Update 2016-09-04 09:00: We're ready to go ahead with the anticipated performance upgrade of our POP3/IMAP service. Unfortunately, this requires us to temporarily shut down the live server while changed data is synchronised to the new server. This will take place late tonight (Sunday 4th September).

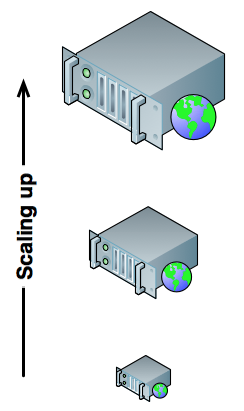

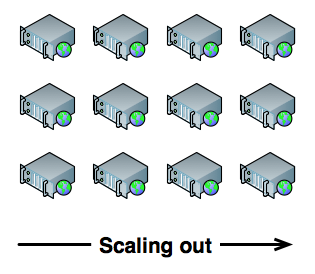

Update 2016-08-24 10:30: The server has been stable overnight and this morning. All services (IMAP, POP3, webmail, etc.) are functioning normally for most clients, and we have received no new complaints today about the outstanding email issue. The changes we made to the mail server configuration have significantly reduced the load. That said, we have moved the mail server upgrade up our priority list: we expect the new hardware to arrive at our datacenter by the end of August and the mail server to be upgraded in early September.

Update 16:00: We have made some changes to the mail server that have drastically reduced the load, and services should now be functioning reasonably well. We are keeping an eye on the server to ensure this remains the case.

Over the past few days we have become aware of problems with IMAP and POP3 connections to our mail server. Webmail is also affected, as it connects to the server via IMAP.

The problems are caused by high load on the server, and we are currently investigating the reason for it.

We have a major mail server upgrade planned for September, which we will announce on our blog soon. The upgrade will improve the server's speed and performance and should eliminate many of the issues we have faced recently.